Data generation is very important aspect of software development.

It helps developer understand application behaviour with future data grow and improve performance of database queries or apply caching mechanism in application.

I used one of the commercial products for data generation in a past. It was RedGate - pretty awesome tool.

Now, if you have license for the Visual Studio 2010 Ultimate edition, you are able to use built in tool for data generation. Only Ultimate edition has this feature and it is part of the database project.

In this sample I will use new database with 2 tables - product and poductcategory.

In real life these tables are very small. I would use orders or something like that.

I just want show you how to use data generation on a table with foreign keys.

Here are steps to generate data:

1)

First of all, Prepare SQL statements to create tables.

This script you will run only once.

Another script will clean-up generated data and you will execute it many times.

--create tables:

CREATE TABLE [dbo].[Product](

[ProductId] [int] IDENTITY(1,1) NOT NULL,

[SKU] [nvarchar](50) NULL,

[ProductName] [nvarchar](100) NOT NULL,

[Price] [money] NULL,

[ProductTypeId] [int] NULL,

CONSTRAINT [PK_Product] PRIMARY KEY CLUSTERED

(

[ProductId] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

CREATE TABLE [dbo].[ProductType](

[ProductTypeId] [int] IDENTITY(1,1) NOT NULL,

[ProductTypeName] [nvarchar](50) NOT NULL,

CONSTRAINT [PK_ProductType] PRIMARY KEY CLUSTERED

(

[ProductTypeId] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

--clean up both tables

truncate table Product

delete from ProductType

I use "delete" sql statement against ProductType because you cannot use "truncate" when other tables have reference key to this one.

2) Create Visual Studio 2010 database project:

Run Visual Studio. Open server explorer. If server explorer is not visible at your screen, press Ctrl-W,L.

In server explorer add new connection to database. You will use that connection in database project.

Create new project by using "File->New->Project" menu option.

I am currently using SQL 2008, so I selected "SQL Server 2008 Database Project".

Next step is to import database schema into project. You need right mouse click on project and select "Import Database Objects and Settings".

In opened window select connection, which you created in server explorer.

When all database objects were imported you can create "Data Generation Plan".

After you finish previous step you will see list all of tables from database.

For better understanding how data will be generated in database, you should turn on data generation preview by right mouse clicking on table view and selecting "Preview Data Generation" from popup menu.

After you done all steps above, you should see screen like this:

case 1: ProductType table will contain randomly generated data

specify number of rows to be generated in every table and press F5 or use following menu:

Select connection string

In next dialog box select yes:

After data generation is complete you will see data generation result:

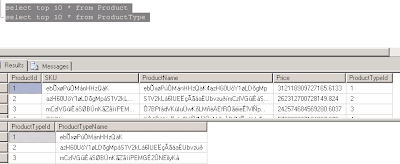

To check generated data in database, run following sql statements:

select top 10 * from Product

select top 10 * from ProductType

you will see following result:

case 1a: The smae as above but you need ratio between reference table and main table

For example: for every inserted row in ProductType you want generate 100 products.

To Achieve this task you need set

1. Related table

2. Ratio between main table and reference table

3. Number of rows in reference table

you will notice that every time you change number of rows for the "ProductType" table, number of rows for the "Product" table will be adjusted according ratio.

Run data generation by pressing F5. On prompt to delete existing data select "YES". After data generated check database.

case2: You have to use pre-defined refernce data

Let's say your web application uses predefined list of product types such as "Default", "Downloadable" and "Gift Certificate". In code you already created enumeration for that. It means that you cannot use randomly generated data in that table.

What I am recommending is to create SQL script, which you can run even after data deletion:

--clean up both tables

truncate table Product

delete from ProductType

--insert product types

set identity_insert ProductType on

insert into ProductType (ProductTypeId, ProductTypeName) values (1,'Default')

insert into ProductType (ProductTypeId,ProductTypeName) values (2, 'Downloadable')

insert into ProductType (ProductTypeId,ProductTypeName) values (3, 'Gift Certificate')

set identity_insert ProductType off

Next step is to update your data generation plan. First of all you have to set number of records to be generated to 0 for the table "ProductType". After that you should uncheck checkbox for the "ProductType" table and set related table to "None" as shown below.

You accomplished first task - rows will not be inserted into "ProductType" table.

Another task, which you need to achieve is to insert data in "Product" table "ProductTypeId" column and do not break foreign key constrain. Currently, "ProductTypeId" column is set up with "Foreign key" data generator, which works only when data is being inserted in reference table, but we turned off that option. You should select another generator. The best option is to use "Data bound generator". This type of generator can query data from another source, like database table and use different distribution mechanism:

There is another, similar, generator - "Sequential data bound generator". The difference is that "Data bound generator" makes sql query before start insert rows in a table one time and "Sequential data bound generator" makes sql query every time before it inserts row in a table.

Once you selected "Data bound generator" you should set its properties.

1. you need select connection string from dropdown list box in "Connection information". you can add new connection string right there if your refernce data located in another database. In our case you should select connection string, which we configured in "Server explorer".

2. you need to write sql statement to select "ProductTypeId" from "ProductType" table.

You can write statement like this "select * from ProductType" or like this "select ProductTypeId from ProductType". If you used first one you should select what field you are going to use from the result set in "Generator Output" as shown below:

3. you should also set seed value to 1 in our case.

4. Distribution - it is up to you

The configuration is done. Just start data generation by pressing F5. Here is very important thing, which you have to do on this screen - you should select "No".

If you don't do it, you will loose data in reference table and load data process will fail because of constraint violation. Finally, you should get following result:

As for price, you can update min and max value for that in "Money generator" for the "Price" column.